Chapter 43: Moment Generating Functions

Learning Objectives

- Learn the definition of a moment-generating function.

- Find the moment-generating function of a binomial random variable.

- Use a moment-generating function to find the mean and variance of a random variable.

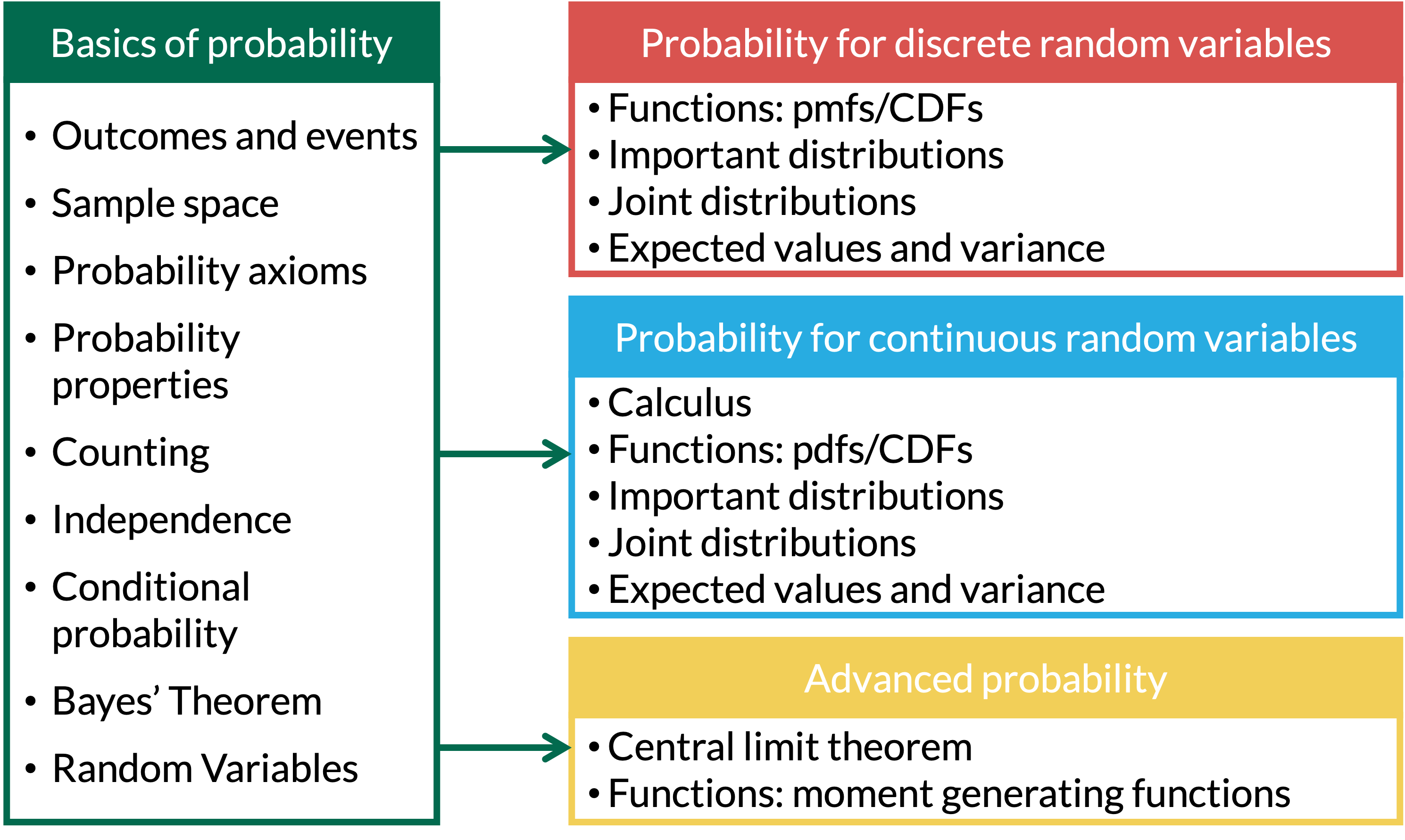

Where are we?

What are moments?

Definition 1

The \(j^{th}\) moment of a r.v. \(X\) is \(\mathbb{E}[X^j]\), for \(j = 1, 2, 3, \ldots\)

Example 2

\(1^{st}-4^{th}\) moments
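
As a brief illustration, the first four moments written out from the definition of the \(j^{th}\) moment are:

\[\mathbb{E}[X], \quad \mathbb{E}[X^2], \quad \mathbb{E}[X^3], \quad \mathbb{E}[X^4]\]

The first moment is the mean, and the first two together give the variance, \(Var(X) = \mathbb{E}[X^2] - (\mathbb{E}[X])^2\). For a concrete case (an assumed example, not from the original slides), if \(X \sim Bernoulli(p)\) then \(X^j = X\) for every \(j \geq 1\), so all four moments equal \(p\).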

What is a moment generating function (mgf)?

Definition 3

If \(X\) is a r.v., then the moment generating function (mgf) associated with \(X\) is: \[M_X(t)= \mathbb{E}[e^{tX}]\]

Remarks

For a discrete r.v., the mgf of \(X\) is \[M_X(t)= \mathbb{E}[e^{tX}]=\sum_{all \ x}e^{tx}p_X(x)\]

For a continuous r.v., the mgf of \(X\) is \[M_X(t)= \mathbb{E}[e^{tX}]=\int_{-\infty}^{\infty}e^{tx}f_X(x)dx\]

- The mgf \(M_X(t)\) is a function of \(t\), not of \(X\), and it might not be defined (i.e. finite) for all values of \(t\). For the results below, we need it to be finite for all \(t\) in some open interval around \(t=0\).
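
The discrete formula can be checked numerically. The sketch below is a minimal illustration, not part of the original notes: it evaluates the sum \(\sum_{x}e^{tx}p_X(x)\) for a binomial r.v. and compares it with the standard closed form \((1-p+pe^t)^n\). The function names and the parameters \(n=10\), \(p=0.3\) are assumptions chosen for the example.

```python
import math


def binomial_pmf(n, p):
    """Return {x: P(X = x)} for X ~ Binomial(n, p)."""
    return {x: math.comb(n, x) * p**x * (1 - p) ** (n - x) for x in range(n + 1)}


def mgf_discrete(pmf, t):
    """Evaluate M_X(t) as the sum over all x of e^{t x} * p_X(x)."""
    return sum(math.exp(t * x) * prob for x, prob in pmf.items())


def binomial_mgf_closed_form(n, p, t):
    """Standard closed-form binomial mgf: (1 - p + p e^t)^n."""
    return (1 - p + p * math.exp(t)) ** n


# Illustrative parameters (assumed, not from the notes).
n, p = 10, 0.3
pmf = binomial_pmf(n, p)

# Print the direct sum next to the closed form for a few values of t.
for t in (-1.0, 0.5, 1.0):
    print(t, mgf_discrete(pmf, t), binomial_mgf_closed_form(n, p, t))
```

The two columns should agree up to floating-point rounding, since a binomial r.v. has finitely many values and the sum is exact.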

Example

Example 4

What is \(M_X(t)\) for \(t=0\)?
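
A short worked answer, using only the definition \(M_X(t)= \mathbb{E}[e^{tX}]\):

\[M_X(0) = \mathbb{E}[e^{0 \cdot X}] = \mathbb{E}[1] = 1\]

This holds for every r.v. \(X\), so every mgf is defined at \(t=0\) and equals 1 there.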

Theorem

Theorem 5

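The moment generating function uniquely specifies a probability distribution: if two r.v.’s have mgf’s that are finite and equal in an open interval around \(t=0\), then they have the same distribution.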

Theorem 6

\[\mathbb{E}[X^r] = M_X^{(r)}(0)\]

The superscript \((r)\) in this equation denotes the \(r\)th derivative with respect to \(t\), which is then evaluated at \(t=0\)

- When \(r=1\), we are taking the first derivative

- When \(r=4\), we are taking the fourth derivative
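
A sketch of why this theorem holds, assuming the mgf is finite in an open interval around \(t=0\) so that the expectation can be exchanged with the infinite sum and the series can be differentiated term by term. Expanding \(e^{tX}\) as a Taylor series gives

\[M_X(t) = \mathbb{E}\left[\sum_{k=0}^{\infty}\frac{(tX)^k}{k!}\right] = \sum_{k=0}^{\infty}\frac{t^k}{k!}\mathbb{E}[X^k]\]

Differentiating \(r\) times with respect to \(t\) removes the terms with \(k<r\), and setting \(t=0\) removes the terms with \(k>r\), leaving

\[M_X^{(r)}(0) = \frac{r!}{r!}\mathbb{E}[X^r] = \mathbb{E}[X^r]\]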

Using the mgf to uniquely describe a probability distribution

Example 7

Let \(X \sim Poisson(\lambda)\)

Find the mgf of \(X\)

Find \(\mathbb{E}[X]\)

Find \(Var(X)\)
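
One way to work this example, using the Poisson pmf \(p_X(x) = e^{-\lambda}\lambda^x/x!\) for \(x = 0, 1, 2, \ldots\) and the series \(\sum_{x=0}^{\infty} a^x/x! = e^a\):

\[\begin{aligned}
M_X(t) &= \sum_{x=0}^{\infty} e^{tx}\,\frac{e^{-\lambda}\lambda^x}{x!} = e^{-\lambda}\sum_{x=0}^{\infty}\frac{(\lambda e^t)^x}{x!} = e^{-\lambda}e^{\lambda e^t} = e^{\lambda(e^t-1)} \\
M_X'(t) &= \lambda e^t M_X(t) \quad\Rightarrow\quad \mathbb{E}[X] = M_X'(0) = \lambda \\
M_X''(t) &= \lambda e^t M_X(t) + (\lambda e^t)^2 M_X(t) \quad\Rightarrow\quad \mathbb{E}[X^2] = M_X''(0) = \lambda + \lambda^2 \\
Var(X) &= \mathbb{E}[X^2] - (\mathbb{E}[X])^2 = \lambda + \lambda^2 - \lambda^2 = \lambda
\end{aligned}\]

The mgf is finite for every \(t\), so the derivatives at \(t=0\) are well defined.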

Theorem

Remark: Finding the mean and variance is sometimes easier with the following trick, which works with the log of the mgf.

Theorem 8

Let \(R_X(t) = \ln[M_X(t)]\). Then,

\[\mu = \mathbb{E}[X] = R_X'(0) \text{, and}\] \[\sigma^2 = Var(X) = R_X''(0)\]

Proof.
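
A sketch of the argument, assuming \(M_X(t)\) is finite and twice differentiable near \(t=0\), and using \(M_X(0)=1\), \(M_X'(0)=\mathbb{E}[X]\), and \(M_X''(0)=\mathbb{E}[X^2]\):

\[\begin{aligned}
R_X'(t) &= \frac{M_X'(t)}{M_X(t)} \quad\Rightarrow\quad R_X'(0) = \frac{M_X'(0)}{M_X(0)} = \mathbb{E}[X] \\
R_X''(t) &= \frac{M_X''(t)M_X(t) - [M_X'(t)]^2}{[M_X(t)]^2} \quad\Rightarrow\quad R_X''(0) = \mathbb{E}[X^2] - (\mathbb{E}[X])^2 = Var(X)
\end{aligned}\]

The first line is the chain rule applied to \(\ln[M_X(t)]\), and the second is the quotient rule applied to the first.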

Using \(R_X(t)\) to uniquely describe a probability distribution

Example 9

Let \(X \sim Poisson(\lambda)\).

Find \(\mathbb{E}[X]\) using \(R_X(t)\)

Find \(Var(X)\) using \(R_X(t)\)
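
One way to work this example, starting from the standard Poisson mgf \(M_X(t) = e^{\lambda(e^t-1)}\):

\[\begin{aligned}
R_X(t) &= \ln[M_X(t)] = \lambda(e^t - 1) \\
R_X'(t) &= \lambda e^t \quad\Rightarrow\quad \mathbb{E}[X] = R_X'(0) = \lambda \\
R_X''(t) &= \lambda e^t \quad\Rightarrow\quad Var(X) = R_X''(0) = \lambda
\end{aligned}\]

Taking the log removes the outer exponential, so neither derivative needs the product rule.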

Using the mgf to uniquely describe the standard normal distribution

Example 10

Let \(Z\) be a standard normal random variable, i.e. \(Z \sim N(0,1)\).

Find the mgf of \(Z\)

Find \(\mathbb{E}[Z]\)

Find \(Var(Z)\)
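
One way to work this example is to complete the square in the exponent, using \(tz - \frac{z^2}{2} = -\frac{(z-t)^2}{2} + \frac{t^2}{2}\):

\[\begin{aligned}
M_Z(t) &= \int_{-\infty}^{\infty} e^{tz}\,\frac{1}{\sqrt{2\pi}}e^{-z^2/2}\,dz = e^{t^2/2}\int_{-\infty}^{\infty}\frac{1}{\sqrt{2\pi}}e^{-(z-t)^2/2}\,dz = e^{t^2/2} \\
M_Z'(t) &= t\,e^{t^2/2} \quad\Rightarrow\quad \mathbb{E}[Z] = M_Z'(0) = 0 \\
M_Z''(t) &= (1+t^2)\,e^{t^2/2} \quad\Rightarrow\quad \mathbb{E}[Z^2] = M_Z''(0) = 1 \\
Var(Z) &= \mathbb{E}[Z^2] - (\mathbb{E}[Z])^2 = 1 - 0 = 1
\end{aligned}\]

The remaining integral in the first line equals 1 because its integrand is the pdf of a \(N(t,1)\) r.v.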

Mgf’s of sums of independent RV’s

Theorem 11

If \(X\) and \(Y\) are independent RV’s with respective mgf’s \(M_X(t)\) and \(M_Y(t)\), then

\[M_{X+Y}(t) = \mathbb{E}[e^{t(X+Y)}] = \mathbb{E}[e^{tX} e^{tY}] = \mathbb{E}[e^{tX}]\mathbb{E}[e^{tY}]=M_{X}(t)M_{Y}(t)\]
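
As an illustration of how this result is typically used (an assumed example, not from the original slides), let \(X \sim Poisson(\lambda_1)\) and \(Y \sim Poisson(\lambda_2)\) be independent, and recall the standard Poisson mgf \(e^{\lambda(e^t-1)}\). Then

\[M_{X+Y}(t) = M_X(t)M_Y(t) = e^{\lambda_1(e^t-1)}\,e^{\lambda_2(e^t-1)} = e^{(\lambda_1+\lambda_2)(e^t-1)}\]

This is the mgf of a \(Poisson(\lambda_1+\lambda_2)\) r.v., so by the uniqueness of mgf’s, \(X+Y \sim Poisson(\lambda_1+\lambda_2)\).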

Main takeaways

Mgf’s are a purely mathematical definition

- They do not have a direct real-world interpretation

They are helpful mathematically because they are unique to a probability distribution

- We can find the unique mgf for a probability distribution

- And we can find a distribution from an mgf

Mgf’s can sometimes make it easier to find the mean and variance of an RV

Mgf’s are most helpful when we are finding the distribution of a sum or transformation of independent RV’s

- They make the calculation easier!

Mgf’s are often used to prove that certain distributions arise as sums of other ones!