Week 4

- On Monday, 1/29, we will be in RLSB 3A003 B

- On Wednesday, 2/1, we will be in RLSB 3A003 A

Resources

Below is a table with links to resources. Icons in orange mean there is an available file link.

| Lesson | Topic | Slides | Annotated Slides | Recording |

|---|---|---|---|---|

| 6 | SLR: Model Diagnostics 1 | |||

| 7 | SLR: Model Diagnostics 2 |

On the Horizon

- Homework 2 due 2/1

- Lab 2 due 2/8

Class Exit Tickets

Announcements

Monday 1/29

Quiz - no announcements

Wednesday 1/31

Vote in Exit ticket: Emailing me questions privately vs. posting on Slack

I am proposing a new class policy to anonymously post any emailed questions on Slack

I know it can be scary to pose a question to the whole class

Even questions about logistics can be answered by anyone and endorsed by me

- Will help get answers faster

Sometimes I see your email, but don’t have time carved out for my emails until later in the day or the next day

Lab 1 IS ALMOST GRADED!!

Lab 2 IS POSTED!!

Muddiest Points

1. Equality of the residuals - what’s the bias refer in a residual plot? Is that suggesting a non linear relationship between two variables?

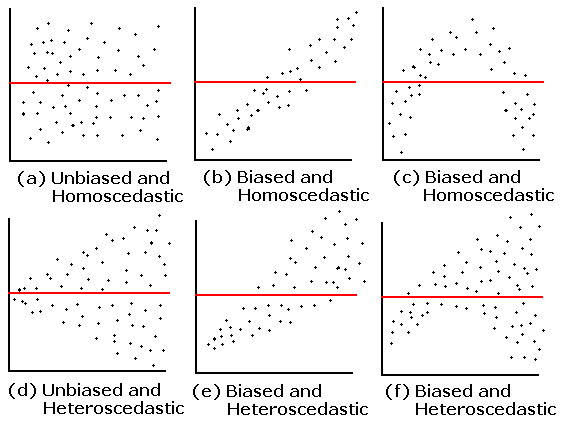

Here is the plot that this question is referring to:

The answer is already in the question! The residual plot can also be used to look at linearity! The above plots that say “unbiased” mean they do not follow the linearity assumption.

2. QQ Plot: What is it? And can you explain the axes, meaning of “quantiles”, and why assuming normality would result in a straight line?

I cannot answer this question better than this video! They go through a smaller dataset of gene expression values and how to make a QQ plot from the data. Remember, our QQ plot is of our residual values!!

3. I’m still a little confused on how to determine if a dataset has a normal distribution. Feels like a subjective decision.

First thing that I want to address: when we are talking about normality, we are not determining if the dataset follows a normal distribution. We are determining if the fitted model violates the normality assumption that we need to use in our population model. We do this by seeing if the fitted residuals follow a normal distribution. I just want to draw attention to this. There is very particular language being used here.

Second thing… Yes! These diagnostic tools are somewhat subjective. You are welcome to use the Shapiro-Wilk test every time you look at a QQ plot! I realize a test with a conclusion might feel more objective and comfortable as we are learning about the model diagnostics. I suggest trying to make a conclusion visually with a QQ plot, then see if it matches the Shapiro-Wilk test. Remember, even in the Shapiro-Wilk test, the null hypothesis is that the fitted residuals come from a normal distribution. So we have to work to disprove that. You can come to the QQ plot with that same prior. If the QQ plot gives blantent evidence that the fitted residuals are not normally distributed, then we violate the assumption.

We’ll keep practicing! As we keep going through regression, we’ll realize that model building is very much an art! There is no one answer in statistics!

4. What are the small nuances in interpreting the normality through a QQ plot?

Thanks for this question! This helped me realize that I was not articulating very well some of my more subconscious thoughts in a QQ plot.

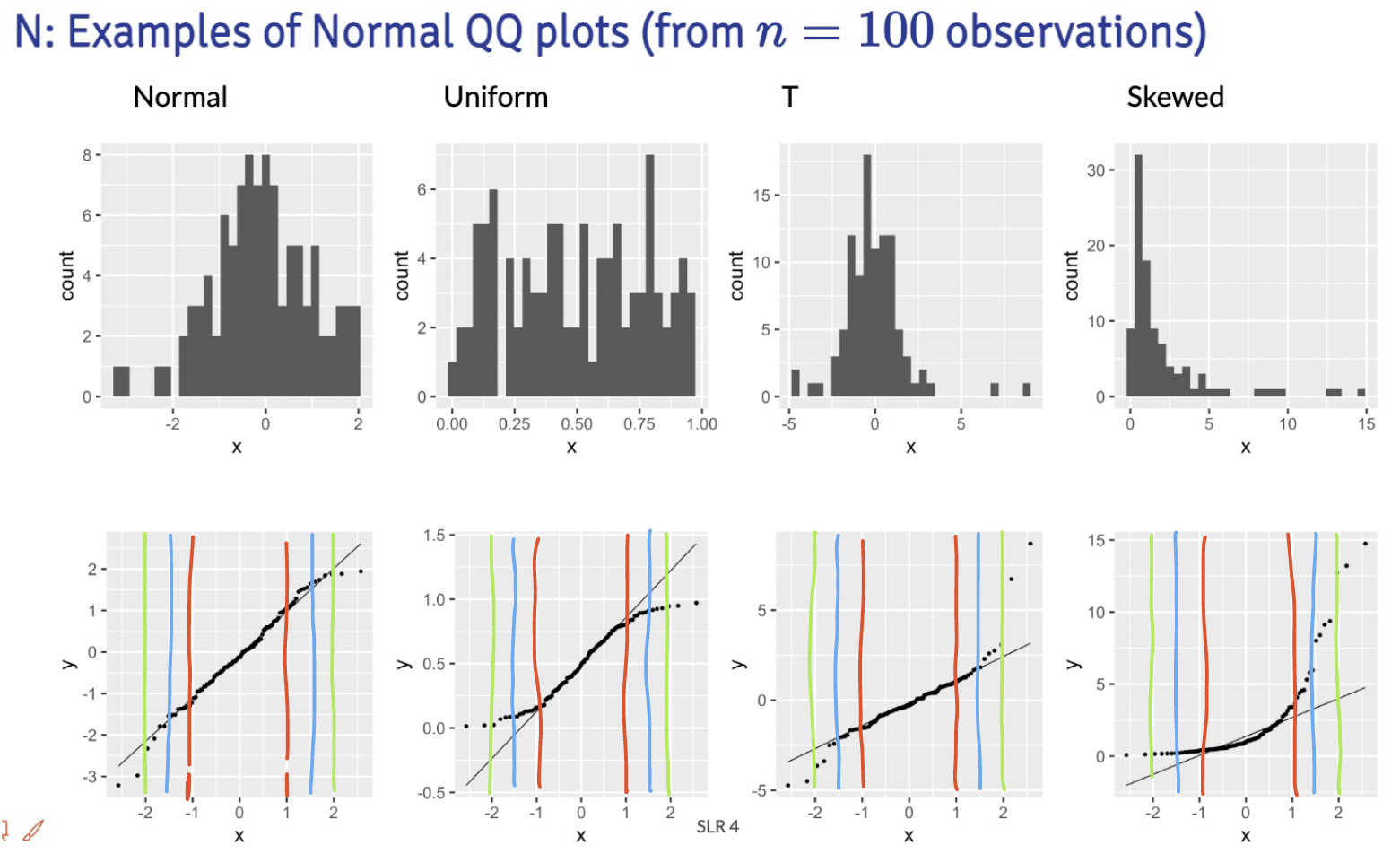

Below are the distribution samples ant their QQ plots from lecture:

I drew red, blue and green lines to bracket certain areas of the plots. I basically start by looking within the red brackets. Do all the points seem to stay close to the black line? If this doesn’t hold for the red bracketed area, then I would say our fitted residuals are not normal. Then I look at the area from the red lines to the blue lines. This is less definite, but if the points don’t seem to stay close to the black line, then I’d say our fitted residuals are not normal. Then I’d look at the are between the blue and green line. If the points aren’t close to the black line, then I am likely okay with it and would NOT make the conclusion that the fitted residuals are NOT normal. Notice, that I am not saying I call them normal. They seem to not violate the normal assumption.

Extra note: The t-distribution is similar to a normal, but it includes larger tails. This is to adjust the normal distribution when our sample size of data is small. However, our assumption aims for fitted residuals to follow a normal distribution. We can be a little more flexible with the QQ plot when we have a smaller sample size, but we should not aim for a t-distribution. Both the normal and t-distribution samples “passed” my normality assessment.

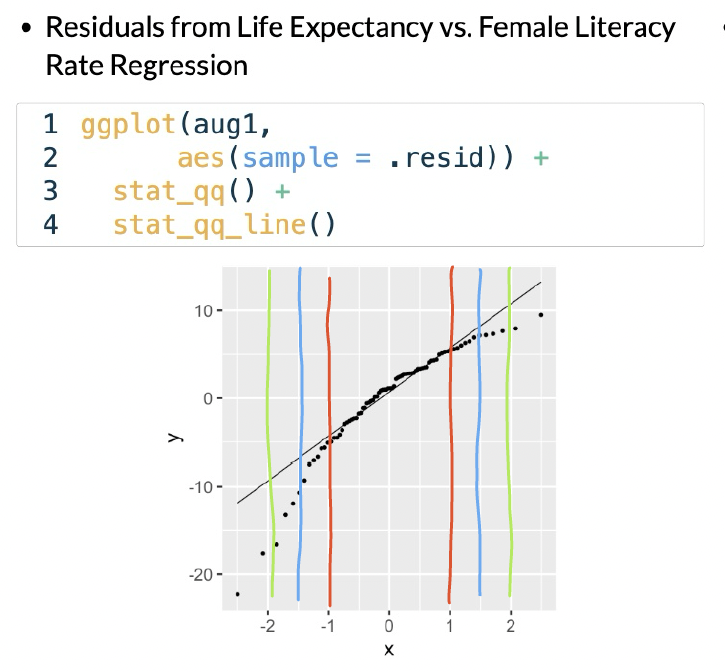

We can check out the example:

In this example, I would say that the fitted residuals violate the normal assumption. Notice that we have points off the black line between the red and blue lines. And even within the red lines, we have some curve. This is okay for our example! That’s because we have not yet included other (likely needed) variables in the model. And what does that mean? The other variables in the model will help explain MORE variance in our Y, which would alter the fitted residuals!!

Draw the red, blue, and green lines on the other QQ plot slides. See what you find, especially when we have different sample sizes!

Still compiling these muddy points:

Explain formula for internally standardized residuals. 2. How do we determine WHAT the source of the issue is for an outlier… i.e. “outlier detection”. Is it just highly situational most times? 3. Leverage (h sub i) what is the significance of it? What does it “tell us” as values change?

“cubic or square transformations must include original X, don’t do this for Y”

The transformations of the X and Y started to feel really abstract and kinda confusing

the relationship between what we’re learning about models and how we’ll actually apply that knowledge to a figure in a report, for example

I understand the reasoning for transforming to make the data to fit the LINE assumptions, but if it makes it hard to interpret, why do we do it?

Hi and Hat metrics and not knowing the magnitude or reason for it was confusing.

what are some other real life examples of influential points besides human error. Can they appear more organically

I’m a little confused in the section about the power ladder – we’re supposed to be looking at the skew of the distribution of the residuals, but the examples shown were of different distributions (x alone, y alone, and x vs y). What should we be looking at to determine what transform, if any, to use?

I’m not super clear on why transformations are even ok to do if they fundamentally change the shape of the data. You had a good sentence right at the end (second to last slide?) – “for every change in x, there’s a (…) change in longevity squared” or something like that? I think it’d be helpful to repeat or elaborate on that.

I’m still not very clear on how to identify outliers vs high leveraged data points by looking at a plot alone.

What is the reasoning behind why we usually want to transform the independent variable instead of the dependent?

This is more about interpretations in multiple linear models. We can have a model with multiple variables as covariates (so multiple X’s on the right side of the equation). If we transform Y, then all the interpretations with every covariate changes. If we can fit model assumptions better with a transformation to one X, then we only change one interpretation!

In the Tukey’s power ladder, is log(x) mean log10(x) or natural log(x)? |

Extra materials with examples of transformations

https://www.youtube.com/watch?v=HIcqQhn3vSM&ab_channel=jbstatistics